SevOne Best Practices Guide - Cluster, Peer, and HSA

SevOne NMS Documentation

All documentation is available from the IBM SevOne Support customer portal.

© Copyright International Business Machines Corporation 2024.

All right, title, and interest in and to the software and documentation are and shall remain the exclusive property of IBM and its respective licensors. No part of this document may be reproduced by any means nor modified, decompiled, disassembled, published or distributed, in whole or in part, or translated to any electronic medium or other means without the written consent of IBM.

IN NO EVENT SHALL IBM, ITS SUPPLIERS, NOR ITS LICENSORS BE LIABLE FOR ANY DAMAGES, WHETHER ARISING IN TORT, CONTRACT OR ANY OTHER LEGAL THEORY EVEN IF IBM HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES, AND IBM DISCLAIMS ALL WARRANTIES, CONDITIONS OR OTHER TERMS, EXPRESS OR IMPLIED, STATUTORY OR OTHERWISE, ON SOFTWARE AND DOCUMENTATION FURNISHED HEREUNDER INCLUDING WITHOUT LIMITATION THE WARRANTIES OF DESIGN, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE, AND NONINFRINGEMENT.

IBM, the IBM logo, and SevOne are trademarks or registered trademarks of International Business Machines Corporation, in the United States and/or other countries. Other product and service names might be trademarks of IBM or other companies. A current list of IBM trademarks is available on ibm.com/trademark.

About

This document provides conceptual information about your SevOne cluster implementation, peers, and Hot Standby Appliance (HSA) technologies. The intent is to help you prepare and troubleshoot a multi-appliance SevOne NMS cluster. Please refer to SevOne NMS Installation Guide on how to rack up SevOne appliances and SevOne NMS Implementation Guide on how to begin using SevOne Network Management System (NMS).

In this guide, any reference to master, any CLI command that contains master, and any command output that contains master means leader. Likewise, any reference to slave means follower.

In a cluster that contains appliances of mixed sizes, the appliance with the largest hardware capacity should be the Cluster Leader. The Cluster Leader incurs additional RAM overhead because of its extra responsibilities, so SevOne strongly advises you to follow this recommendation.

Starting with SevOne NMS 6.7.0, MySQL has been replaced by MariaDB 10.6.12.

SevOne Scalability

SevOne NMS is a scalable system that can suit the needs of any network. SevOne appliances work together in an environment that uses a transparent peer-to-peer technology. You can peer multiple SevOne appliances together to create a SevOne cluster that can monitor an unlimited number of network elements and flow data. A cluster must not contain more than 200 peers, including HSAs. This limit is imposed by the MySQL replication maintainer.
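The two sizing rules stated so far (at most 200 peers including HSAs, and the largest appliance as Cluster Leader) can be checked against a planned inventory. The following is a minimal sketch, not a SevOne tool: the `Appliance` record, its `capacity` field, and the `check_cluster` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass

MAX_PEERS = 200  # limit stated in this guide; HSAs count toward it


@dataclass(frozen=True)
class Appliance:
    name: str
    capacity: int         # hypothetical rating, e.g. 60_000 for a "60K" appliance
    is_hsa: bool = False  # True for the standby half of a peer pair


def check_cluster(appliances, leader_name):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if len(appliances) > MAX_PEERS:
        problems.append(
            f"{len(appliances)} appliances exceed the {MAX_PEERS}-peer limit (HSAs included)"
        )
    # Only non-HSA appliances are considered as leader candidates in this sketch.
    candidates = [a for a in appliances if not a.is_hsa]
    largest = max(a.capacity for a in candidates)
    leader = next(a for a in candidates if a.name == leader_name)
    if leader.capacity < largest:
        problems.append(
            f"leader {leader.name} ({leader.capacity}) is smaller than "
            f"the largest appliance ({largest})"
        )
    return problems
```

Calling `check_cluster(inventory, "name-of-planned-leader")` before a deployment returns human-readable findings rather than raising, so several problems can be reported at once.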

There are several models of the appliances that run the SevOne software.

- PAS - Performance Appliance System (PAS) appliances can be rated to monitor up to 200,000 network elements.

- DNC - Dedicated NetFlow Collector (DNC) appliances monitor flow technologies. A standard DNC supports up to 80K flows per second, whereas a DNC HF supports up to 200K flows per second.

- HSA - Hot Standby Appliances (HSA) enable you to create a pair of appliances that act as one peer to provide redundancy.

SevOne appliances scale linearly to monitor the world’s largest networks. Each SevOne appliance is both a collector and a reporter. In a multi-peer cluster, all appliances are aware of which peer monitors each device and can efficiently route data requests to the user. The system is flexible enough to enable indicators from different devices to appear on the same graph when different SevOne peers monitor the devices.

The peer-to-peer architecture not only scales linearly, with no loss of efficiency as you add peers, but actually gets faster. When a SevOne peer receives a request from one of its peers, it processes the request locally and sends the requesting peer only the data it needs to complete the report. Thus, if a report spans multiple peers, they all work on the report simultaneously and retrieve the results much faster than any single appliance can.

You can create redundancy for each peer with a Hot Standby Appliance that works in tandem with the active appliance to create a peer appliance pair. If the active appliance in the Hot Standby Appliance peer pair fails, the passive appliance in the peer pair takes over. The passive appliance in the Hot Standby Appliance peer pair maintains a redundant copy of all poll data and configuration data for the peer pair. If a failover occurs, the transition from passive appliance to active appliance is transparent to all users and all polling and reporting continues seamlessly.

Each SevOne cluster has a Cluster Leader peer. The cluster leader peer is the SevOne appliance that stores the master copy of Cluster Manager settings, security settings, and other global settings in the config database. All other active peers in your SevOne cluster pull the configuration data from the leader peer's config database. Some security settings and device edit functions depend on communication between the active peers in the cluster and the cluster leader peer. The cluster leader appliance hardware and software are no different from those of any other peer appliance, so you can designate any appliance to be the cluster leader. When you choose the cluster leader, consider geographic location and data center stability, which affect latency and power supply and should be considered for any computer network implementation.

From the user point of view, there is only one peer because each peer in the cluster presents all data, no matter which peer collects it, with no reference to the peer that collects the data. You can log on to any SevOne peer in your cluster and retrieve data that spans any or all devices in your network.

All SevOne communication protocols assume a single hop adjacency which means that a request or replication must be directly sent to the IP address of the destination SevOne peer and cannot be routed in any way by the members of the cluster.

All peers must be able to communicate with the Cluster Leader. A peer can only report on, and move devices to, peers with which it can communicate. The SevOne cluster is designed to operate gracefully in a degraded state when one or more of the peers are disconnected from the rest of the cluster.

- A peer that cannot communicate with the cluster leader peer can run reports that return results for all data available on the peers with which it maintains communication.

- A peer that cannot communicate with the cluster leader cannot make any configuration changes for as long as it is disconnected.

- The peers in the cluster that remain in communication with the cluster leader can make configuration changes and can run reports for all data available on peers with which they maintain communication.
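The degraded-state rules above follow from single-hop adjacency: only direct links count. The sketch below models that reasoning with plain sets. It is an illustration only; the function names and the representation of links as pairs of peer names are assumptions, not part of SevOne NMS.

```python
def direct_neighbors(peer, links):
    """Peers reachable in a single hop; each link is an undirected (a, b) pair."""
    return {b if a == peer else a for a, b in links if peer in (a, b)}


def report_scope(peer, links):
    """Peers whose data a report run on `peer` can include (itself plus direct neighbors)."""
    return {peer} | direct_neighbors(peer, links)


def can_change_config(peer, leader, links):
    """Configuration changes require a direct link to the cluster leader."""
    return peer == leader or leader in direct_neighbors(peer, links)
```

For example, with links `{("leader", "p1"), ("p1", "p2")}`, peer `p2` can still report on its own data and on `p1`, but it cannot make configuration changes because it has no direct link to the leader.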

There are two types of SevOne cluster deployment architectures:

-

Full Mesh - (Recommended) All peers can talk to each other. Recommended for enterprises and service providers to monitor their internal network.

-

Hub and Spoke - (Not Recommended due to many caveats, but supported) All peers can communicate with the Cluster Leader. Peers can only report and move devices to peers that it can communicate with. S ubsets of the other interconnected peers can be defined. Useful for managed service providers. Hub and spoke implementations provide the following:

-

Reports run from the cluster leader peer return fully global result sets.

-

Reports run from a peer in a partially connected subset of peers return result sets from itself, the cluster leader peer, and any other peers with which the peer can directly communicate.

-

Most configuration changes at both the peer level and the cluster level can be accomplished from all peers.

-

Updates and other administrative functions that affect all peers must be run from the cluster leader peer.

-

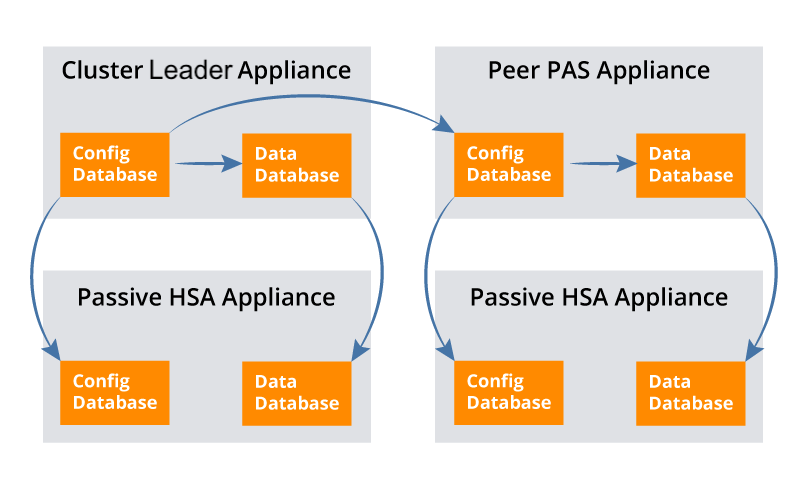
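Given a list of which peers can reach each other directly, the two architectures can be told apart mechanically. This is a minimal sketch under the assumption that connectivity is symmetric and is supplied as pairs of peer names; `classify_topology` is a hypothetical helper, not a SevOne command.

```python
from itertools import combinations


def classify_topology(peers, leader, links):
    """Return 'full mesh', 'hub and spoke', or 'invalid' for a planned cluster."""
    connected = {frozenset(link) for link in links}

    def linked(a, b):
        return frozenset((a, b)) in connected

    # Every peer must be able to communicate with the Cluster Leader.
    if not all(linked(p, leader) for p in peers if p != leader):
        return "invalid"
    # Full mesh: every pair of peers can talk to each other directly.
    if all(linked(a, b) for a, b in combinations(peers, 2)):
        return "full mesh"
    return "hub and spoke"
```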

Database Replication Explanation

The SevOne NMS application peer-to-peer architecture has two fundamental databases.

- Config Database - The config database stores configuration settings such as cluster settings, security settings, device settings, etc. SevOne NMS saves the configuration settings you define (on any peer in the cluster) in the config database on the cluster leader peer. All active appliances in the cluster pull config database changes from the cluster leader peer's config database. Each passive appliance in a Hot Standby Appliance peer pair pulls its active appliance's config database to replicate the config database onto the passive peer.

- Data Database - The data database stores a copy of the config database plus all poll data for the devices/objects that the peer polls. The config database on an active appliance replicates to the data database on the appliance. Each passive appliance in a Hot Standby Appliance peer pair pulls the active appliance's data database to replicate the data database on the passive appliance.
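The replication directions described above can be summarized as a lookup from an appliance's role to the pulls it performs. The sketch below only restates the text as data; the role names and the `source`/`destination` labels are illustrative assumptions and do not correspond to actual database or table names.

```python
def replication_pulls(role):
    """Return (source, destination) pairs describing what an appliance replicates."""
    if role == "leader":
        # The leader holds the authoritative config database and copies it locally.
        return [("own config", "own data")]
    if role == "active":
        return [("leader config", "own config"), ("own config", "own data")]
    if role == "passive":
        # A passive appliance replicates only from its active neighbor.
        return [("active config", "own config"), ("active data", "own data")]
    raise ValueError(f"unknown role: {role!r}")
```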

Peer Port Assignments

Please refer to SevOne NMS Port Number Requirements Guide for details on all required port numbers.

REST API Ports

One of the following ports must be open for the REST API, depending on the version:

- REST API version 1.x - port 8080

- REST API version 2.x - port 9090

The appropriate port must be open for access across all SevOne NMS peers in the cluster to ensure proper operation.
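As a quick reachability test from a workstation, the version-to-port mapping above can be combined with a plain TCP connection attempt. This is a generic sketch using the Python standard library, not a SevOne utility; the function names are hypothetical, and a successful connection shows only that the port accepts connections, not that the REST API is healthy.

```python
import socket

# Major REST API version -> TCP port, as listed in this section.
REST_API_PORTS = {1: 8080, 2: 9090}


def rest_api_port(version):
    """Map a version string such as '2.1' to the port listed for its major version."""
    major = int(str(version).split(".")[0])
    return REST_API_PORTS[major]


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

To cover a whole cluster, call `tcp_port_open(peer_ip, rest_api_port(version))` once per peer IP address.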

To check the REST API version, SSH into your SevOne NMS appliance or cluster and execute the following command.

$ SevOne-show-version

Manage Peers

In a single appliance/single peer cluster implementation, all relevant information (cluster leader, discovery, alerts, reports, etc.) is local to the one appliance. For a single-peer cluster, the SevOne appliance ships with the following configuration:

- Name: SevOne Appliance

- Leader: Yes

- IP: 127.0.0.1

In a multi-peer cluster implementation, one appliance in the cluster is the cluster leader peer. The cluster leader peer receives configuration data such as cluster settings, security settings, device peer assignments, archived alerts, and flow device lists. The cluster leader peer software is identical to the software on all other peers which enables you to designate any SevOne NMS appliance to be the cluster leader peer. After you determine which peer is to be the cluster leader then that peer remains the leader of the cluster and only SevOne Support engineers can change the cluster leader peer designation.

All active peers in the cluster replicate the configuration data from the cluster leader peer. All active SevOne NMS appliances in a cluster are peers of each other. One of the appliances in a Hot Standby Appliance peer pair is a passive appliance. Please refer to section Hot Standby Appliances on how to include Hot Standby Appliance peer pairs in your cluster.

All active peers perform the following actions.

- Provide a full web interface to the entire SevOne NMS cluster.

- Update the cluster leader peer with configuration changes.

- Pull config database changes from the cluster leader peer.

- Communicate with other peers to get non-local data.

- Discover and/or delete the devices you assign to the peer.

- Poll data from the objects and indicators on the devices you assign to the peer.

When you add a network device for SevOne NMS to monitor, you assign the device to a specific SevOne NMS peer. The assigned peer lets the cluster leader know that it (the assigned peer) is responsible for the device. The cluster leader maintains the device assignment list in the config database, which is replicated to the other peers in the cluster. From this point forward, all modifications to the device, including discovery, polling, and deletion, are the responsibility of the SevOne NMS peer to which you assign the device. The peer collects and stores all data for the device. This is in direct contrast to the way that other network management systems collect data: they collect data remotely and then ship the data back to a leader server for storage. SevOne NMS stores all local data on the local peer, which allows for greater link efficiency and speed.

Add Peers

For a new appliance, follow the steps in the SevOne NMS Installation Guide to rack up the appliance and to assign the appliance an IP address. The SevOne NMS Implementation Guide describes the steps to log on to the new appliance. On the Startup Wizard, select the Add This Appliance to an Existing SevOne NMS Cluster and click Next to navigate to the Cluster Manager at the appliance level on the Integration tab.

You can access the Cluster Manager from the navigation bar. Click the Administration menu and select Cluster Manager. From the Cluster Manager, click in the cluster hierarchy on the left side next to the peer to add/move to display the peer's IP address. Click on the IP address and then select the Integration tab on the right side.

- All data on this peer/appliance will be deleted.

- You need to know the name of this peer (appears in the cluster hierarchy on the left side of the Cluster Manager).

- You need to know the IP address of this appliance (appears in the cluster hierarchy on the left side of the Cluster Manager).

- You need to know the temporary integration password of the peer/appliance (appears beside the countdown timer).

- You need to be able to access the Cluster Manager on a SevOne NMS peer that is already in the cluster to which you intend to add this appliance.

- If you do not complete the steps within ten minutes, you must start again at step 1. Click Allow Peering … on this tab to queue this peer/appliance for peering within the following ten minutes.

After you click Allow Peering on this tab, you will have ten minutes to perform the following steps from a peer that is already in the cluster to which you intend to add this peer/appliance.

1. Click Allow Peering on the Integration tab on the appliance you are adding.

2. Log on to the peer in the destination cluster as a System Administrator.

3. Go to the Cluster Manager (Administration > Cluster Manager).

4. At the Cluster level, select the Peers tab.

5. Click Add Peer to display a pop-up.

6. Enter the Peer Name, the IP Address, and the temporary integration password of the peer/appliance you are adding. This password can be found at the bottom of the Integration tab on the peer being added, beside the countdown timer. For example: "Your appliance is currently in INCORPORATE mode. Your temporary password is 0fdf318beaed7e6e. You have 9:23 remaining until incorporate mode is turned off."

7. On the pop-up, click Add Peer:

   - All data on the peer/appliance you are adding is deleted.

   - Do not do anything on the peer you are adding until a Success message appears on the peer on which you clicked Add Peer.

   - You can continue working and performing business as usual on all peers that are already in the cluster.

   - The new peer appears on the Peers tab in the destination cluster with a status message. Click Refresh to update the status.

   - The new peer appears in the cluster hierarchy on the left.

8. After the Success message appears on the peer in the destination cluster, you can go to the peer you just added; the entire cluster hierarchy to which you added the peer should appear on the left.

9. You can use the Device Mover to move devices to the new peer.
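When the peering steps are scripted or documented in a runbook, it can help to extract the temporary password and the remaining time from the INCORPORATE mode message quoted in the steps above. The sketch below parses that example wording with a regular expression. It assumes the message always follows the wording of that single example, which this guide does not guarantee; `parse_incorporate_message` is a hypothetical helper.

```python
import re

# Pattern derived only from the example message quoted in this guide.
_INCORPORATE = re.compile(
    r"temporary password is (?P<password>\w+)\."
    r".*?You have (?P<minutes>\d+):(?P<seconds>\d{2}) remaining",
    re.DOTALL,
)


def parse_incorporate_message(text):
    """Return (password, seconds_remaining), or None if the text does not match."""
    match = _INCORPORATE.search(text)
    if match is None:
        return None
    remaining = int(match["minutes"]) * 60 + int(match["seconds"])
    return match["password"], remaining
```

A caller can compare the returned number of seconds with the time the remaining steps are expected to take and restart from step 1 if the window is too short.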

If the integration fails, click View Cluster Logs on the Peers tab on the peer that is in the destination cluster to display a log of the integration messages.

Click Clear Failed to remove failed attempts from the list. Failed attempts are not automatically removed from the list which enables you to navigate away from Peers tab during the integration.

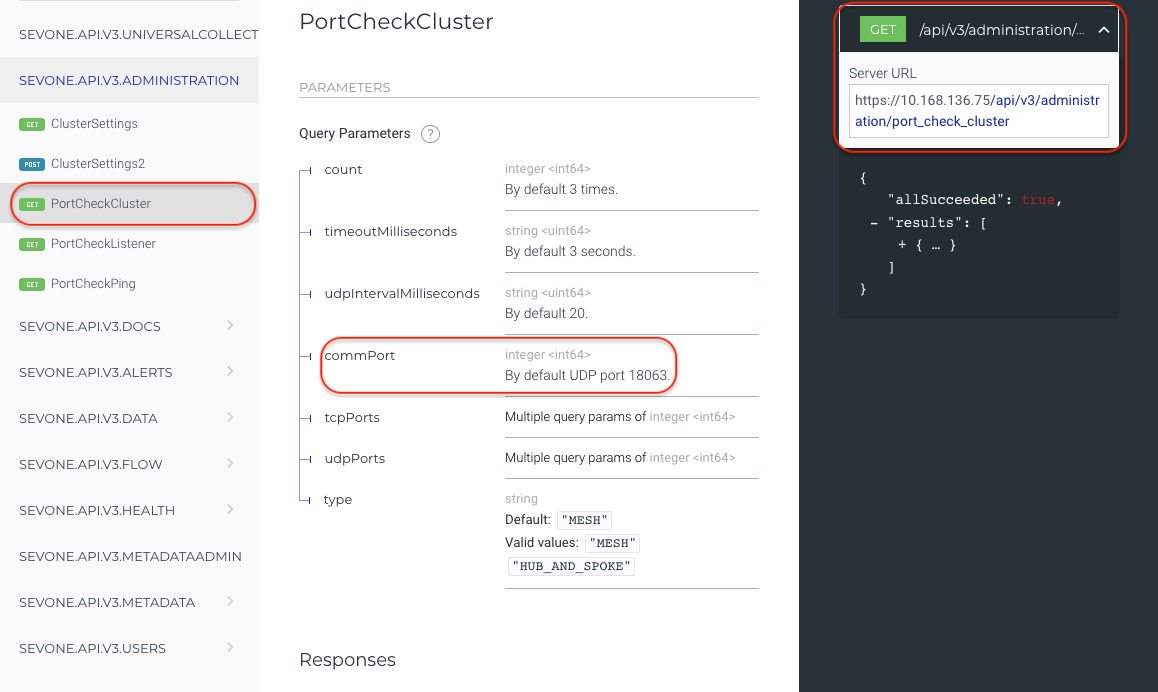

Port Checker

Ports can be checked by using a standalone Command Line Interface utility or from the SOA REST API. It allows you to check the status of a predefined list of pings (specifically, ICMP ping), TCP ports, and UDP ports.

Command Line Interface

The following command provides help on how to check the peers in a cluster from SevOne NMS > Command Line Interface utility.

$ SevOne-act check peers --help

To test a connection to a specific remote peer that is not part of the cluster, the remote peer must be in incorporate mode. Use the --peer-ips option.

$ SevOne-act check peers --enable-logging true --peer-ips <Remote Peer IP address>

$ SevOne-act check peers --enable-logging true --peer-ips 10.168.136.75

$ SevOne-act check peers --enable-logging true --udp-ports 53 \
    --tcp-ports 22 --port-timeout 3000 --port-try-count 4 \
    --strip-success false

SOA REST API

To perform administrative checks, open https://<IP address>/api/v3/docs in your browser and select SEVONE.API.V3.ADMINISTRATION. For example, for a cluster-wide mesh port check between all peers, click the PortCheckCluster request. By default, UDP port 18063 is used internally; this port must be open, otherwise all UDP ports appear blocked. Alternatively, specify a communication port, commPort, that is open and unused.

Please refer to SevOne NMS Port Number Requirements Guide for the expected ports to be in open status.
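If the SevOne-act check peers invocations from the Command Line Interface section are run from automation, the argument list can be assembled in one place so that only the options shown in this guide are used. The sketch below builds the argument list and does not execute anything. The helper name `check_peers_argv` is hypothetical, values are passed through as single strings exactly as in the examples, and no claim is made about options or value formats beyond those examples.

```python
# Options that appear in the examples in this guide, as Python keyword names.
KNOWN_OPTIONS = {
    "enable_logging",
    "peer_ips",
    "udp_ports",
    "tcp_ports",
    "port_timeout",
    "port_try_count",
    "strip_success",
}


def check_peers_argv(**options):
    """Build the argv list for `SevOne-act check peers` from keyword options."""
    argv = ["SevOne-act", "check", "peers"]
    for name, value in options.items():
        if name not in KNOWN_OPTIONS:
            raise ValueError(f"option not shown in the guide: {name}")
        flag = "--" + name.replace("_", "-")
        # Booleans are written as lowercase true/false, as in the examples.
        text = str(value).lower() if isinstance(value, bool) else str(value)
        argv += [flag, text]
    return argv
```

The resulting list can be passed to `subprocess.run` on an appliance where the command is available, which avoids shell quoting issues.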

Update Default Port List

To update the default port list to include the new ports or to remove the old ones, modify the following MySQL tables.

- net.port_checker_tcp

- net.port_checker_udp

Troubleshooting

When adding a new peer fails, execute the following command to return a list of ports that are failing between the peers.

The peer being checked/added must be in incorporate mode.

$ SevOne-act check peers --enable-logging true --peer-ips <Remote Peer IP address>

Once you have the list of failing ports between the peers, open them in your firewall. Alternatively, although it is not recommended, you may remove the failing ports from the default port list if necessary.

Port checking can be disabled by removing all entries from the MySQL tables. For details, please see Update Default Port List. When port checking is disabled and you attempt to add a new peer, no ports are checked and the peer may be integrated improperly. Also, all future port checks will succeed even if they should not.

Remove Peer

The Cluster Manager also provides a Remove Peer button. Caution should be used when considering the use of this feature.

When a peer is removed, the /etc/hosts file is recreated. As a result, all host entries in the hosts file are lost. Please proceed with caution!

You must contact SevOne Support to re-add the peer to the cluster. Remember: The Add Peer functionality removes all existing data on the appliance.

This feature should only be considered when the peer is acting in a way that is adverse to the overall cluster functionality or performance. In a Hot Standby Appliance peer pair, if you click this button you remove both appliances in the peer.

The following statements assume that the peer is still functional enough to continue to appropriately run the SevOne NMS software.

- All devices polled by the peer are not removed from the peer and are not distributed to other peers. These devices are inaccessible from the peers that remain in the cluster.

- Data is not removed from the peer you remove from the cluster.

- After you agree to the confirmation prompts, there is no way to cancel the peer remove process.

- The removed peer no longer appears in the hierarchy on the Cluster Manager.

- The peer removal is bi-directional which means the removed peer is excised from the net.peers table and the removed peer attempts to change its cluster leader to itself. If the removed peer is partially or totally unresponsive, this function restricts MySQL access to remove the affected peer from the cluster leader.

Hot Standby Appliances

SevOne appliances can collect gigabytes of data each day. You can create redundancy for each peer with a Hot Standby Appliance to create a peer that is made up of a pair of appliances. If the active appliance in the peer pair fails there is no significant loss of poll data. The passive appliance in the peer pair assumes the role of the active appliance in the peer pair. Having a peer pair in a peer to peer cluster implementation has its own terminology.

Terminology

- Primary Appliance - Implemented to be the active, normal, polling appliance. If the primary appliance fails, it is still the primary appliance but it becomes the passive appliance.

- Secondary Appliance - Implemented to be the passive appliance in a Hot Standby Appliance peer pair. If the active appliance fails, it is still the secondary appliance but it assumes the active role.

- Active Appliance - The currently polling appliance. Upon initial set up the primary appliance is the active appliance. If the primary appliance fails, the secondary appliance becomes the active appliance in the peer pair.

- Passive Appliance - The appliance that currently replicates from the active appliance. Upon initial set up the secondary appliance is the passive appliance.

- Neighbor - The other appliance in the peer pair. The primary appliance’s neighbor is the secondary appliance and vice versa.

- Fail Over - From the perspective of the active appliance, fail over occurs when the currently passive appliance assumes the active role.

- Take Over - From the perspective of the passive appliance, take over occurs when the passive appliance assumes the active role.

- Split Brain - When both appliances in a peer pair are active or both appliances in a peer pair are passive this is known as split brain. Split brain can occur when the communication between the appliances is interrupted and the passive appliance becomes active. When an administrative user logs on, an administrative message appears to let you know that the split brain condition exists. Please refer to section Troubleshoot Cluster Management for details.
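The terminology above separates an appliance's fixed designation (primary or secondary) from its current role (active or passive). The sketch below classifies a peer pair from the current roles of its two appliances. The state labels `normal` and `failed over` and the function name are illustrative assumptions; only split brain is a term defined in this guide.

```python
VALID_ROLES = {"active", "passive"}


def pair_state(primary_role, secondary_role):
    """Classify a peer pair from the current roles of its primary and secondary appliances."""
    if primary_role not in VALID_ROLES or secondary_role not in VALID_ROLES:
        raise ValueError("roles must be 'active' or 'passive'")
    if primary_role == secondary_role:
        # Both active or both passive is the split brain condition.
        return "split brain"
    if primary_role == "active":
        return "normal"
    # The secondary appliance currently holds the active role.
    return "failed over"
```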

Both appliances in the peer pair must have the same hardware/software configuration to prevent performance problems in the event of a failover (for example, a 60K HSA for a 60K PAS). Each Hot Standby Appliance peer pair can have only the two appliances in the peer pair. A Hot Standby Appliance cannot have a Hot Standby Appliance attached to it.

In a Hot Standby Appliance peer pair implementation, the role of the active appliance in the peer pair is to:

- Provide a full web interface to the entire SevOne NMS cluster.

- Update the leader peer with configuration changes.

- Pull config database changes from the leader peer.

- Communicate with other peers to get any non-local data.

- Discover and/or delete the devices you assign to the peer.

- Poll data from the assigned devices.

The role of the passive appliance in the peer pair is to:

- Replicate the config database information and the data database information from the active appliance.

- Switch to the active role when the current active appliance is not reachable.

An HSA implementation creates an appliance relationship in which the passive appliance receives a continuous stream of database replication (commonly known as binary logs of database actions) from its active neighbor. The passive appliance replays this stream and makes precisely the same changes that the active appliance made (for example, record collected data, add a new device configuration, or delete a monitored device and all of its historic data). The passive appliance also performs a continuous heartbeat check against the active appliance. If the passive appliance determines that the active appliance’s heartbeat has been absent, for any reason, for a specified time period (referred to as the minimum dead time), it assumes that the active appliance has suffered a catastrophic failure. At that point, the passive appliance takes over polling for its respective devices and notifies the cluster leader that it has taken over for what was the active appliance.
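The takeover decision described above reduces to a comparison between the time since the last heartbeat and the minimum dead time. The following is a simplified model of that logic, not SevOne's implementation: the class name, the use of seconds as the unit, and the rule that the role never reverts automatically are assumptions made for illustration.

```python
class HeartbeatMonitor:
    """Simplified model of a passive appliance watching its active neighbor."""

    def __init__(self, min_dead_time, now=0.0):
        self.min_dead_time = min_dead_time  # seconds without a heartbeat before takeover
        self.last_seen = now                # time of the most recent heartbeat
        self.role = "passive"

    def heartbeat(self, now):
        """Record a heartbeat received from the active appliance."""
        self.last_seen = now

    def tick(self, now):
        """Re-evaluate the role; take over once the minimum dead time has elapsed."""
        if self.role == "passive" and now - self.last_seen >= self.min_dead_time:
            self.role = "active"
        return self.role
```

In this model a heartbeat that arrives after the takeover does not switch the role back, which mirrors how an interrupted link can leave both appliances active (split brain) until an administrator intervenes.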

The passive appliance in the Hot Standby Appliance peer pair can take over for the active appliance at any time. Any changes you make to the active appliance (including settings updates, new polls, or device deletions) are replicated to the passive appliance as frequently as possible.

Hot Standby Appliance Peer Pair Ports

The primary appliance and the secondary appliance in a Hot Standby Appliance peer pair need to communicate with each other to maintain a consistent environment. The appliances need to have the following ports open between each other:

- TCP 3306 - MySQL replication

- TCP 3307 - MySQL replication

- TCP 5050 - Active/passive communication
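Before pairing two appliances, the three ports listed above can be verified from each appliance toward its neighbor. The sketch below keeps the port list as data and accepts any probe function, so the connectivity test itself (a socket connection, a firewall rule lookup, and so on) stays outside the example. `blocked_hsa_ports` and the `probe(host, port)` signature are hypothetical.

```python
# TCP ports required between the two appliances of a peer pair, from this section.
HSA_PORTS = {
    3306: "MySQL replication",
    3307: "MySQL replication",
    5050: "Active/passive communication",
}


def blocked_hsa_ports(neighbor, probe):
    """Return {port: purpose} for every required port the probe reports as unreachable.

    `probe` is any callable taking (host, port) and returning True when the port is open.
    """
    return {
        port: purpose
        for port, purpose in HSA_PORTS.items()
        if not probe(neighbor, port)
    }
```

An empty result means every required port was reported open; the check should be run in both directions because firewall rules are often asymmetric.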

Add an Appliance to Create a Hot Standby Appliance Peer Pair

There are two ways to implement a Hot Standby Appliance peer pair: VIP and non-VIP. Each has its advantages and disadvantages, and each works best in certain implementations.

Virtual IP Configuration (VIP Configuration)

VIP Configuration requires each appliance to have two Ethernet cards and a dedicated IP address. The two appliances share a virtual IP address, which is the IP address of the appliance that is currently active. This works when the two appliances are on the same subnet.

Advantages

- Transparent to the end user; if a failover occurs, the IP address does not change.

- Appliances are not separated by a firewall.

- Replicated data is restricted to a subnet.

Disadvantages

- Requires three IP addresses.

- Only works if the two appliances are in the same subnet. Barring complicated routing, this setup generally means that the two appliances are close to each other, so if the building goes down, both appliances go down.

In a VIP configuration, the access lists necessary for SNMP, ICMP, port monitoring, and other polled monitoring are only set for the virtual IP address.

VIP Configuration requires you to set up two interfaces.

- eth0 - This is the virtual interface and should be the same on both appliances. This interface is brought up and down appropriately by the system.

- eth1 - This is the administrative interface for the appliance. The system does not alter this interface.

The Cluster Manager provides a Peer Settings tab to enable you to view the Primary, Secondary, and Virtual IP addresses in a VIP configuration Hot Standby Appliance peer pair.

- Primary Appliance IP Address - The administrative IP address for the primary appliance (eth1).

- Secondary Appliance IP Address - The administrative IP address for the secondary appliance (eth1).

- Virtual IP Address - The virtual IP address for the peer pair (eth0).

Non-VIP Configuration

Non-VIP Configuration requires each appliance to have an IP address and be able to communicate with each other. In the event of a failover or takeover when the appliances switch roles the IP address of the peer pair changes accordingly.

Advantages

- Requires two IP addresses.

- Appliances do not need to be on the same subnet.

Disadvantages

- IP address of the peer pair changes when the appliances fail over.

  You must either check two IP addresses or include a DNS load balancer to update the DNS record.

- A firewall separates the appliances.

- Data replicates across a WAN.

In a non-VIP configuration, all access lists need to include the IP addresses of both appliances in the event of a failover.

Non-VIP Configuration requires you to set up only one interface.

- eth0 - This is the administrative interface for the appliance. The system does not alter this interface.

The Cluster Manager provides a Peer Settings tab to enable you to view the Primary and Secondary IP addresses in a non-VIP configuration Hot Standby Appliance peer pair.

- Primary Appliance IP Address - The administrative IP address for the primary appliance (eth0).

- Secondary Appliance IP Address - The administrative IP address for the secondary appliance (eth0).

- Virtual IP Address - (this field should be blank).

Note: If you do not specify either the primary appliance IP address or the secondary appliance IP address, no active/passive actions take place.
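The access-list guidance for the two configurations can be expressed as one rule: with a virtual IP address, monitored devices only need to allow that address; without one, they need to allow both appliance addresses. The sketch below encodes that rule. The function name is hypothetical, and the addresses in the usage example are documentation-range placeholders.

```python
def access_list_ips(primary_ip, secondary_ip, virtual_ip=None):
    """Return the IP addresses that monitored devices should allow for a peer pair."""
    if not primary_ip or not secondary_ip:
        # Mirrors the note above: without both addresses no active/passive actions occur.
        raise ValueError("both the primary and secondary appliance IP addresses are required")
    if virtual_ip:
        # VIP configuration: access lists are set only for the virtual IP address.
        return [virtual_ip]
    # Non-VIP configuration: either appliance may be polling after a failover.
    return [primary_ip, secondary_ip]
```

For example, `access_list_ips("192.0.2.11", "192.0.2.12")` returns both addresses, while passing `virtual_ip="192.0.2.10"` returns only the virtual address.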

SevOne-hsa-add

The SevOne-hsa-add command line script enables you to add a Hot Standby Appliance to create a peer pair in your SevOne cluster. The Hot Standby Appliance must be a clean box that contains no data in the data database or the config database.

- Follow the steps in the SevOne NMS Installation Guide to rack up the appliance and to perform the steps in the config shell to give the Hot Standby Appliance an IP address.

- On the Hot Standby Appliance, log in to the SevOne NMS User Interface. To access the Cluster Manager from the navigation bar, click the Administration menu and select Cluster Manager. Click the expand arrow in the Cluster Navigation panel and click the IP address of the appliance to be added. Select the Integration tab for the appliance you are adding and then click Allow Peering. After you click Allow Peering, you have 10 minutes to complete the next steps.

- On the Primary appliance in the peer pair, access the command line prompt and enter: SevOne-hsa-add.

- Follow the prompts to enter the Hot Standby Appliance's IP address and the applicable password.

If adding the HSA fails during the masterslaveconsole format follower operation, you can use the following steps as a workaround.

- Using a text editor of your choice, edit the /etc/cron.d/mode file.

- Search for the line containing discover-netflow.

- Comment out this line by adding a # at the start of the line.

- Save the /etc/cron.d/mode file.

- Add the HSA.

- Using a text editor of your choice, edit the /etc/cron.d/mode file again.

- Search for the line containing discover-netflow.

- Uncomment this line by removing the # at the start of the line.

- Save the /etc/cron.d/mode file.
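The comment and uncomment steps above can be sketched as a small text transformation. This is a minimal illustration, not a SevOne tool: the function name is hypothetical, it works on the file contents as a string, and the cron entries shown in the usage note are invented samples rather than the real contents of /etc/cron.d/mode.

```python
def set_cron_line_enabled(cron_text: str, marker: str, enabled: bool) -> str:
    """Comment or uncomment every line that contains `marker`.

    Hypothetical helper: a line is disabled by a leading '#', as in the steps above.
    """
    result = []
    for line in cron_text.splitlines():
        if marker in line:
            stripped = line.lstrip()
            if enabled and stripped.startswith("#"):
                # Remove the leading '#' (and any space after it).
                line = stripped.lstrip("#").lstrip()
            elif not enabled and not stripped.startswith("#"):
                # Add a '#' at the start of the line.
                line = "#" + line
        result.append(line)
    trailing_newline = "\n" if cron_text.endswith("\n") else ""
    return "\n".join(result) + trailing_newline


# Invented sample content for illustration only.
sample = (
    "0 * * * * root /usr/local/bin/other-job\n"
    "*/5 * * * * root /usr/local/bin/discover-netflow\n"
)
disabled = set_cron_line_enabled(sample, "discover-netflow", enabled=False)
restored = set_cron_line_enabled(disabled, "discover-netflow", enabled=True)
```

Only lines that contain the marker are touched, so the rest of the file is written back unchanged. Back up the file before editing it on a real appliance.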

DNC Hot Standby Appliance Implementation

When your cluster requires a Hot Standby Appliance for a Dedicated NetFlow Collector there are a few considerations. During normal operations, the active appliance collects raw flow data and aggregates flow data for all FlowFalcon views that you define to use aggregated flow data. When a user runs a FlowFalcon report that uses an aggregated view, the report draws from the aggregated flow database on the active appliance. The aggregated flow data from the active appliance is replicated to the passive appliance. Raw flow data is not replicated from the active appliance to the passive appliance.

Like the HSA implementation for a PAS, there are two ways to implement an HSA peer pair for a DNC: Virtual IP Address (VIP) and non-VIP.

Virtual IP Address Configuration (VIP)

To reiterate, VIP configuration requires each appliance to have two Ethernet cards and a dedicated IP address. The two appliances share a virtual IP address, which is the IP address of the appliance that is currently active.

Advantages

- Transparent to the end user; if a failover occurs, the IP address does not change.

- Appliances are not separated by a firewall.

- Replicated data is restricted to a subnet.

Disadvantages

- Requires three IP addresses.

- Only works if the two appliances are in the same subnet.

VIP Configuration requires you to set up two interfaces.

- eth0 - This is the virtual interface and should be the same on both appliances. This interface is brought up and down appropriately by the system.

- eth1 - This is the administrative interface for the appliance. The system does not alter this interface.

The Cluster Manager provides a Peer Settings tab to enable you to view the Primary, Secondary, and Virtual IP addresses in a VIP configuration Hot Standby Appliance peer pair.

- Primary Appliance IP Address - The administrative IP address for the primary appliance (eth1).

- Secondary Appliance IP Address - The administrative IP address for the secondary appliance (eth1).

- Virtual IP Address - The virtual IP address for the peer pair (eth0).

Non-VIP Configuration

Non-VIP Configuration requires each appliance to have an IP address and be able to communicate with each other. You must configure the devices to send all raw flow data to the IP address of both appliances so that raw flows that are not replicated are still available from both appliances. The passive appliance does not store or process raw flow data unless there is a failover.

Advantages

- Requires two IP addresses.

- Appliances do not need to be on the same subnet.

Disadvantages

- IP address of peer pair changes when the appliances fail over.

- Devices must be configured to send flow data to both IP addresses.

- You must check two IP addresses or include a DNS load balancer to update the DNS record.

- A firewall separates the appliances.

- Data replicates across a WAN.

In a non-VIP configuration, all access lists need to include the IP addresses of both appliances in the event of a failover.

Non-VIP Configuration requires you to set up only one interface.

- eth0 - This is the administrative interface for the appliance. The system does not alter this interface.

Note: If you do not specify either the primary appliance IP address or the secondary appliance IP address, no active/passive actions take place.

Technical Details

The bandwidth requirements are as follows, using a DNC1000 for the example.

1000 interfaces x 10 aggregated FlowFalcon views x 200 results x 2 directions = 4,000,000 records every minute.

200 bytes per record means 800,000,000 bytes per minute.

That is 3.6 Gigabits per minute, or 60 Mb per second.

The connection must sustain at least a 60 Mb TCP connection.
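The sizing arithmetic can be reproduced for other appliance sizes with a short sketch. The function name is hypothetical, and the conversion assumes 8 bits per byte and decimal megabits, which this section does not state. Under those assumptions the DNC1000 inputs work out to about 6.4 gigabits per minute, or roughly 107 Mb per second, which is higher than the 60 Mb figure quoted, so confirm the sizing for your deployment with SevOne.

```python
def replication_bandwidth(interfaces: int, views: int, results_per_view: int,
                          directions: int = 2, bytes_per_record: int = 200):
    """Estimate aggregated flow replication load (hypothetical helper).

    Returns (records per minute, bytes per minute, megabits per second).
    Assumes 8 bits per byte and 1 Mb = 1,000,000 bits.
    """
    records_per_minute = interfaces * views * results_per_view * directions
    bytes_per_minute = records_per_minute * bytes_per_record
    megabits_per_second = bytes_per_minute * 8 / 60 / 1_000_000
    return records_per_minute, bytes_per_minute, megabits_per_second


# DNC1000 example inputs from the text above.
records, total_bytes, mbps = replication_bandwidth(1000, 10, 200)
print(f"{records:,} records/min, {total_bytes:,} bytes/min, {mbps:.1f} Mb/s")
```

Changing the interface count, the number of aggregated views, or the record size shows how quickly the sustained link requirement grows.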

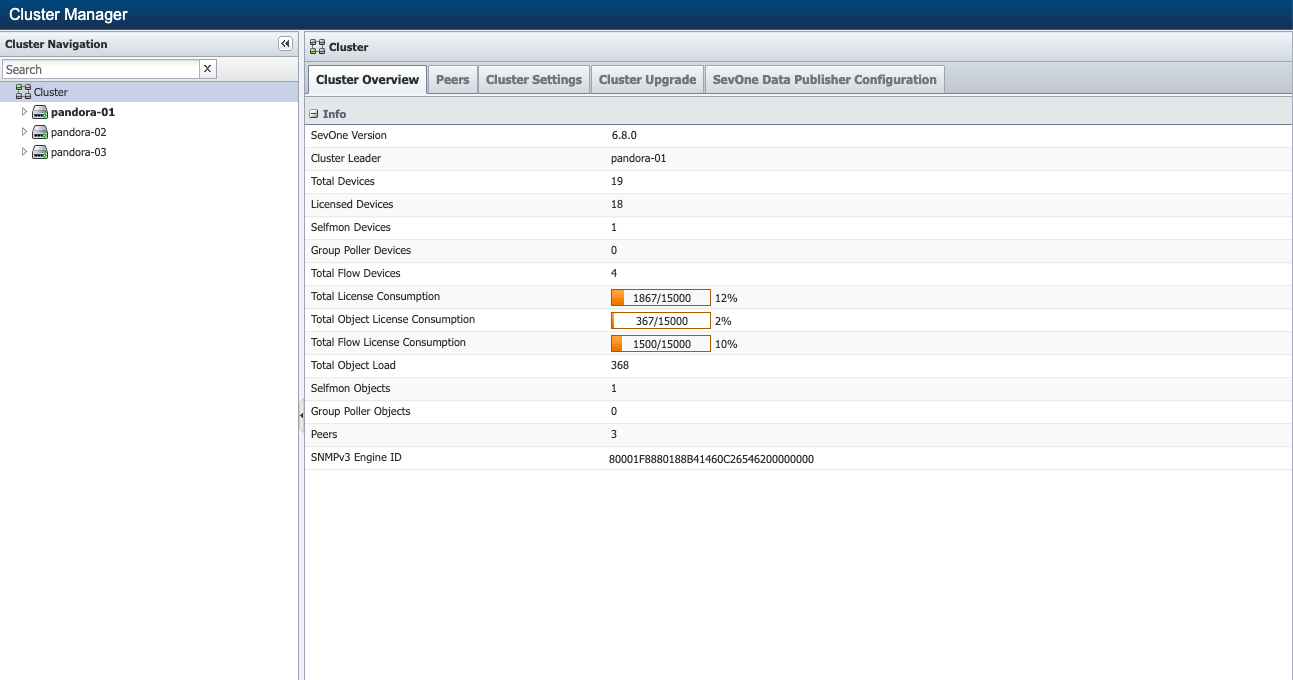

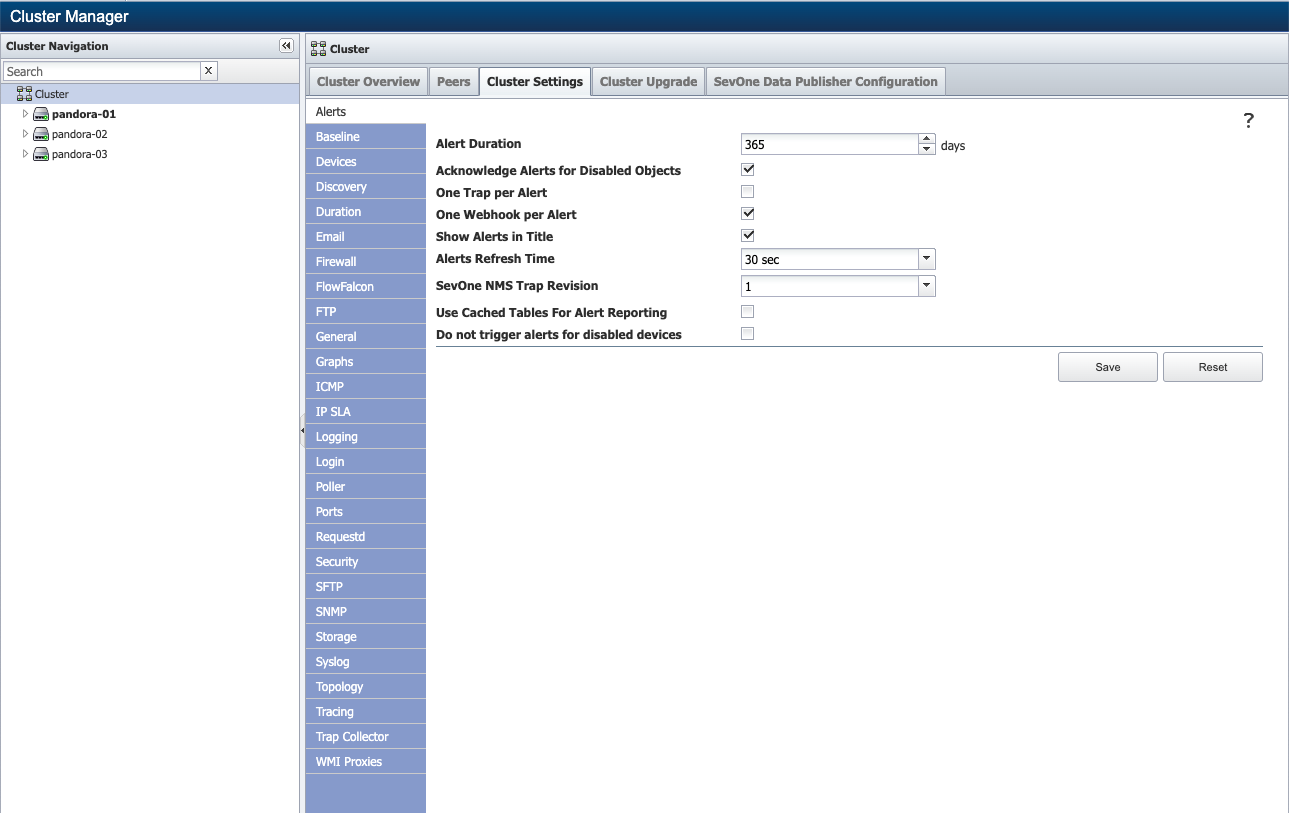

Troubleshoot Cluster Management

The Cluster Manager displays statistics and enables you to define application settings. The Cluster Manager enables you to integrate additional SevOne NMS appliances into your cluster, to resynchronize the databases, and to change the roles of Hot Standby Appliances (fail over, take over, rectify split brain). The following is a subsection of the comprehensive Cluster Manager documentation. See the SevOne NMS System Administration Guide for additional details.

To access the Cluster Manager from the navigation bar, click the Administration menu and select Cluster Manager.

The left side enables you to navigate your SevOne NMS cluster hierarchy. When the Cluster Manager appears, the default display is the Cluster level with the Cluster Overview tab selected.

- Cluster Level - The Cluster level enables you to view cluster wide statistics, to view statistics for all peers in the cluster and to define cluster wide settings.

- Peer Level - The Peer level enables you to view peer specific information and to define peer specific settings. The cluster leader peer name displays at the top of the peer hierarchy in bold font and the other peers display in alphabetical order.

- Appliance Level - Click to display appliance level information including database replication details. Each Hot Standby Appliance peer pair displays the two appliances that act as one peer in the cluster. The appliance level provides an Integration tab to enable you to add a new peer to your cluster.

Peer Level Cluster Management

Peer Level – At the Peer level, on the Peer Settings tab, the Primary/Secondary subtab enables you to view the IP addresses for the two appliances that act as one SevOne NMS peer in a Hot Standby Appliance (HSA) peer pair implementation. The Primary appliance is initially set up to be the active appliance. If the Primary appliance fails, it is still the Primary appliance but its role changes to the passive appliance. The Secondary appliance is initially set up to be the passive appliance. If the Primary appliance fails, the Secondary appliance is still the Secondary appliance but it becomes the active appliance. You define the appliance IP address upon initial installation and implementation. See the SevOne NMS Installation Guide and SevOne NMS Implementation Guide for details.

- The Primary Appliance IP Address field displays the IP address of the primary appliance.

- The Secondary Appliance IP Address field displays the IP address of the secondary appliance.

- The Virtual IP Address field appears empty unless you implement the primary appliance and the secondary appliance to share a virtual IP address (VIP HSA implementation).

- The Failover Time field enables you to enter the number of seconds for the passive appliance to wait for the active appliance to respond before the passive appliance takes over. SevOne NMS pings every 2 seconds and the timeout for a ping is 5 seconds.

Appliance Level Cluster Management

Appliance Level – At the Appliance level the appliance IP address displays. For a Hot Standby Appliance peer pair implementation two appliances appear.

- The Primary appliance appears first in the peer pair.

- The Secondary appliance appears second in the peer pair.

- The passive appliance in the peer pair displays (passive).

- The active appliance that is actively polling does not display any additional indicators.

Click on an appliance and an icon appears in the upper-right corner. Click the icon to display options that depend on the appliance you select in the hierarchy on the left side.

- Select Device Summary to access the Device Summary for the appliance. When there are report templates that are applicable for the device, a link appears to the Device Summary along with links to the report templates.

- Select Fail Over to have the active appliance in a Hot Standby Appliance peer pair become the passive appliance in the peer pair. This option appears when you select the active appliance in a Hot Standby Appliance peer pair in the hierarchy.

- Select Take Over to have the passive appliance in a Hot Standby Appliance peer pair become the active appliance in the peer pair. This option appears when you select the passive appliance in a Hot Standby Appliance peer pair in the hierarchy.

- Select Resynchronize Data Database to have an active appliance pull the data from its own config database to its data database or to have the passive appliance in a Hot Standby Appliance peer pair pull the data from the active appliance's data database. This is the only option that appears when you select the cluster leader peer's appliance in the hierarchy.

- Select Resynchronize Config Database to have an active appliance pull the data from the cluster leader peer's config database to the active peer's config database or to have the passive appliance in a Hot Standby Appliance peer pair pull the data from the active appliance's config database.

- Select Rectify Split Brain to rectify situations when both appliances in a Hot Standby Appliance peer pair think they are active or both appliances think they are passive. For details, refer to the section Split Brain Hot Standby Appliances.

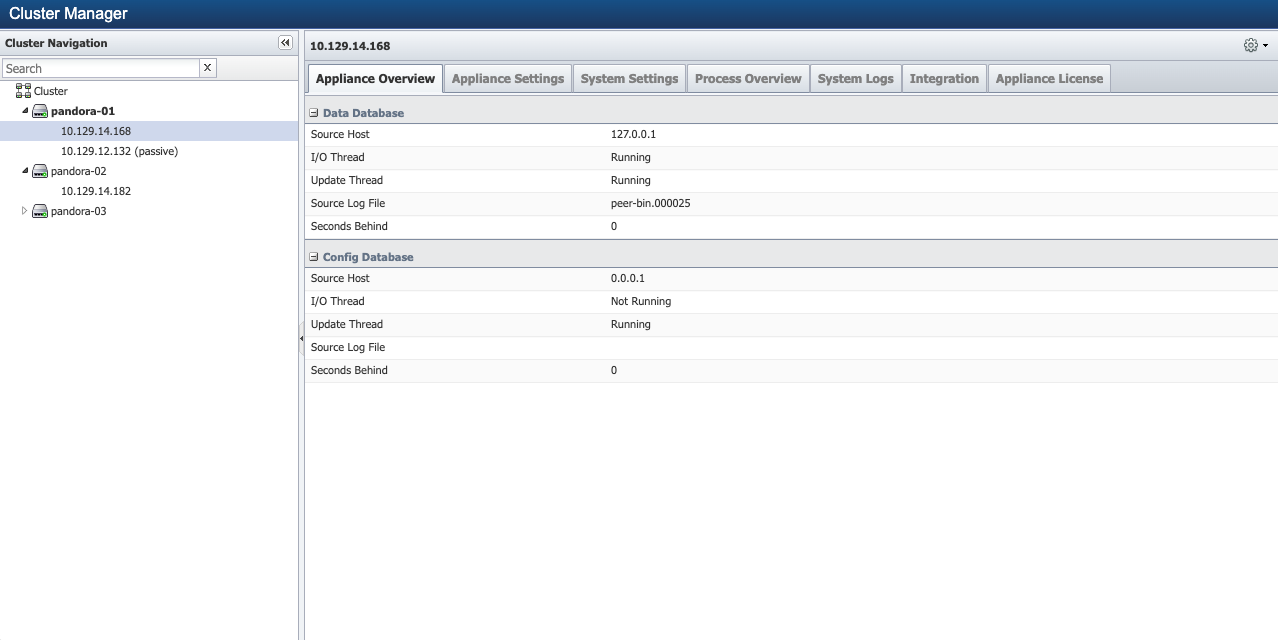

Appliance Overview

Click the expand arrow next to a peer in the cluster hierarchy on the left side, click the IP address of an appliance, and then select the Appliance Overview tab on the right side to display appliance level information.

Data Database Information

- Source Host - Displays the IP address of the source from where the appliance replicates the data database. In a single appliance implementation and on an active appliance, this is the IP address of the appliance itself. HSA passive appliance data database replicates from the active appliance data database.

- I/O Thread - Displays Running when an active appliance is querying its config database for updates for the data database. Displays Not Running when the appliance is not querying the config database. HSA passive appliance data database queries the active appliance data database.

- Update Thread - Displays Running when the appliance is in the process of replicating the config database to the data database. Displays Not Running when the appliance is not currently replicating to the data database.

- Source Log File - Displays the name of the log file the appliance reads to determine if it needs to replicate the config database to the data database.

- Seconds Behind - Displays 0 (zero) when the data database is in sync with the config database or displays the number of seconds that the synchronization is behind.

Config Database Information

- Source Host - Displays the IP address of the source from where the appliance replicates the config database. In a single appliance implementation and on the cluster leader active appliance, this is the IP address of the appliance itself. HSA passive appliance config database replicates from the active appliance config database.

- I/O Thread - Displays Running when an active appliance is querying the cluster leader peer config database for updates. Displays Not Running when the appliance is not querying the cluster leader peer config database. HSA passive appliance config database queries the active appliance config database.

- Update Thread - Displays Running when the appliance is in the process of replicating the config database. Displays Not Running when the appliance is not replicating the config database.

- Source Log File - Displays the name of the log file the appliance reads to determine if it needs to replicate the config database.

- Seconds Behind - Displays 0 (zero) when the config database is in sync with the cluster leader peer config database or displays the number of seconds that the synchronization is behind.
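When you review these fields for several appliances, it can help to reduce each database block to a list of items worth a closer look. The sketch below is illustrative only: the class, field names, and lag threshold are hypothetical, the values are assumed to be copied by hand from the Appliance Overview tab, and treating a Not Running thread as a warning is an assumption rather than documented SevOne behavior.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReplicationStatus:
    """One Data Database or Config Database block (hypothetical field names)."""
    source_host: str
    io_thread: str       # "Running" or "Not Running"
    update_thread: str   # "Running" or "Not Running"
    seconds_behind: int  # 0 means the databases are in sync


def replication_warnings(status: ReplicationStatus,
                         max_lag_seconds: int = 0) -> List[str]:
    """Return human-readable warnings for one replication status block."""
    warnings = []
    if status.io_thread != "Running":
        warnings.append("I/O Thread is not running")
    if status.update_thread != "Running":
        warnings.append("Update Thread is not running")
    if status.seconds_behind > max_lag_seconds:
        warnings.append(f"replication is {status.seconds_behind} seconds behind")
    return warnings
```

An empty list means the block shows both threads running and no lag beyond the chosen threshold.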

Split Brain Hot Standby Appliances

A split brain typically results from a failover followed by a fail back, where the appliance that was active goes down and then comes back up again as the active appliance. The lack of communication from the active appliance causes the passive appliance to become the active appliance, which makes both appliances in the Hot Standby Appliance pair active. A split brain can also occur when both appliances are passive.

When a user with an administrative role logs on to SevOne NMS and there is a Hot Standby Appliance peer pair that is in a split brain state, an administrative message appears.

- Neither appliance in your Hot Standby Appliance peer pair with IP addresses <n> and <n> is in an active state.

- Both appliances in your Hot Standby Appliance peer pair with IP addresses <n> and <n> are either active or both appliances are passive.

There are two methods to resolve a split brain.

- SevOne NMS User Interface - Cluster Manager

- Command Line Interface

SevOne NMS User Interface

The Cluster Manager displays the cluster hierarchy on the left and enables you to rectify split brain occurrences. From the navigation bar click the Administration menu and select Cluster Manager to display the Cluster Manager.

In the cluster hierarchy, click on the name of the peer pair that is the Hot Standby Appliance peer pair to display the two IP addresses of the two appliances that make up the peer pair. The Primary appliance displays first.

- In a split brain situation where both appliances are active, neither appliance displays Passive.

- In a split brain situation where both appliances are passive, both appliances display Passive.

Select one of the affected appliances in the hierarchy on the left side. Click the icon in the upper-right corner to display a Rectify Split Brain option.

- When both appliances think they are passive and you select this option, the appliance for which you select this option becomes the active appliance in the Hot Standby Appliance peer pair.

- When both appliances think they are active and you select this option, the appliance for which you select this option becomes the passive appliance in the Hot Standby Appliance peer pair.

Example: Select 192.129.14.168 and click Rectify Split Brain to make 192.129.14.168 the passive appliance when both appliances are active.
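The effect of Rectify Split Brain described above can be modeled in a few lines. This is a sketch of the documented outcome only, not of how SevOne NMS implements it; the function name is hypothetical, and the second address, 192.0.2.10, is an invented peer for the example.

```python
from typing import Dict


def rectify_split_brain(states: Dict[str, str], selected: str) -> Dict[str, str]:
    """Return the appliance roles after Rectify Split Brain on `selected`.

    `states` maps each appliance IP address to "active" or "passive".
    """
    if len(states) != 2 or selected not in states:
        raise ValueError("expected a peer pair that contains the selected appliance")
    roles = set(states.values())
    if not roles <= {"active", "passive"}:
        raise ValueError("roles must be 'active' or 'passive'")
    if len(roles) != 1:
        # One active and one passive appliance: not a split brain, nothing to do.
        return dict(states)
    result = dict(states)
    # Both passive: the selected appliance becomes active.
    # Both active: the selected appliance becomes passive.
    result[selected] = "active" if roles == {"passive"} else "passive"
    return result


pair = {"192.129.14.168": "active", "192.0.2.10": "active"}
after = rectify_split_brain(pair, "192.129.14.168")
```

In the example, `after` leaves 192.0.2.10 active and makes 192.129.14.168 passive, so choose the appliance to select based on which one should end up in which role.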

Command Line Interface Method

Perform the following steps to fix a split brain situation from the command line interface.

- Log on to the peer that is the Secondary appliance that is in active/leader mode.

- Enter masterslaveconsole.

- When in masterslaveconsole, you can enter Help for a list of commands.

- To check the appliance type, enter GET TYPE.

- To check the appliance status, enter GET STATUS.

- To make the appliance passive (that is, the follower), enter BECOME SLAVE.

- After you run the command, enter GET JOB STATUS to check the status. This tells you if the process is still running and for how long.

After the process completes, check SevOne-masterslave-status to confirm that the changes were made and that the appliances are in their original configuration.

Scale Specifications

The scale specifications below reflect what SevOne has tested, approves, and recommends.

Users on NMS - Cluster

| Category | Max Tested |
| --- | --- |
| Number of Users (User Manager) | 10,000 |
| Number of Users Logged In (Session Manager) | 10,000 (estimated max) |
| Number of User Roles (User Role Manager) | 1,000 |
| Number of Active Users | 1,000 |
| Number of Concurrent Users | 400 |

Alerts

| Category | Max Tested |
| --- | --- |
| Total Active Alerts | 50,000 |
| Total Archived Alerts Allowed on System | 2 million |
| Total Archived Alerts Max Display | 50,000 |
| Maximum Number of Policies | 1,000 |
| Maximum Number of Thresholds Processed in 3 Min - Peer | 38,000 |
| Maximum Number of Status Maps | 1,000 |

Traps - Peer

There are some discernible differences between the types of traps: unknown, logged, and converted to alerts.

| SevOne-trapd Thread Count | Maximum Processed | Maximum Received |
| --- | --- | --- |
| Default = 10 | 1,000 | 1,000 |
| Maximum setting = 99 | 1,500 | 4,000 |